Building an ETL pipeline with Airflow and ECS

ETL (extract, transform, load) is an automated process that takes raw data, extracts and transforms the information required for analysis, and loads it into a data warehouse. There are different ways to build an ETL pipeline; in this post we'll use three main tools:

- Airflow: an open-source platform, widely used by data engineers, for authoring, scheduling, and monitoring workflows.

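- AWS ECS/Fargate: Amazon Elastic Container Service (ECS) is a container management service that makes it easy to run, stop, and manage your containers. Fargate is its serverless launch type, so there are no EC2 instances to provision.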

- AWS S3: Amazon Simple Storage Service, AWS's object storage service.
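Before any of these services come into play, the three ETL stages can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the pipeline from the post. A hypothetical CSV string stands in for a raw file in S3, and an in-memory SQLite database stands in for the data warehouse. The names `RAW_CSV`, `extract`, `transform`, `load`, and the `orders` table are all made up for this example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: stands in for a CSV file that would land in S3.
RAW_CSV = """order_id,amount,currency
1,10.50,usd
2,,usd
3,7.25,eur
"""


def extract(raw_text: str) -> list[dict[str, str]]:
    """Extract: parse raw CSV text into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, float, str]]:
    """Transform: drop rows with no amount, cast types, upper-case currency codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return cleaned


def load(records: list[tuple[int, float, str]], conn: sqlite3.Connection) -> int:
    """Load: insert records into a hypothetical 'orders' table (stand-in for the warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()
    return len(records)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    loaded = load(transform(extract(RAW_CSV)), connection)
    print(loaded, connection.execute("SELECT * FROM orders").fetchall())
```

In the architecture described in this post, each of these functions would more plausibly run inside its own container. Airflow would decide when each one runs, and S3 would hold the intermediate data.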

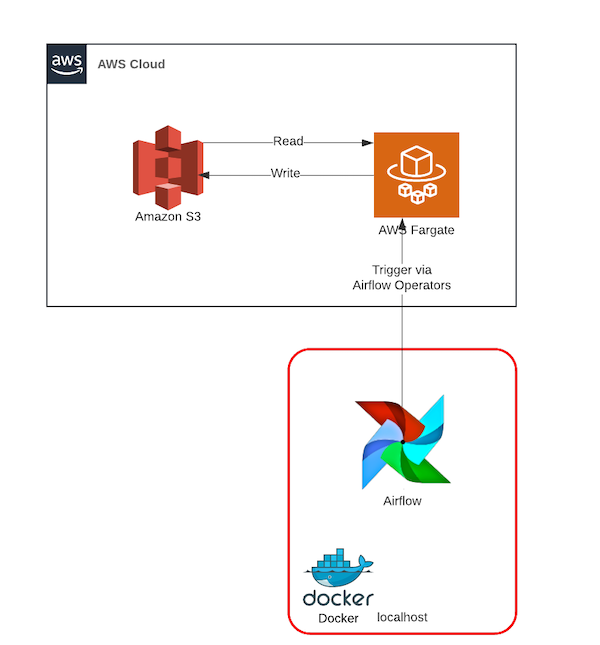

The architecture we will build follows the schema below:

Architecture schema using AWS, Docker, and Airflow (designed with Lucid.app).
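In this kind of architecture, a containerized step is started on ECS with the Fargate launch type. The sketch below assumes boto3's ECS client (`run_task`) as the mechanism and builds the request arguments for it. The cluster, task definition, container name, command, subnet, and security-group values are hypothetical placeholders, not taken from the post. Fargate tasks use the `awsvpc` network mode, which is why a network configuration is required.

```python
from typing import Any


def build_fargate_run_task_kwargs(
    cluster: str,
    task_definition: str,
    container_name: str,
    command: list[str],
    subnets: list[str],
    security_groups: list[str],
) -> dict[str, Any]:
    """Build keyword arguments for boto3's ECS client.run_task on Fargate.

    All argument values are caller-supplied; nothing here is specific to the post.
    """
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "count": 1,
        # Fargate requires awsvpc networking, so subnets and security groups are mandatory.
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                # ENABLED lets a task in a public subnet without a NAT gateway pull its image.
                "assignPublicIp": "ENABLED",
            }
        },
        # Override the container command, e.g. to select which ETL step to run.
        "overrides": {
            "containerOverrides": [{"name": container_name, "command": command}]
        },
    }


def run_etl_task(**kwargs: Any) -> list[str]:
    """Start the task and return the launched task ARNs (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3 installed

    ecs = boto3.client("ecs")
    response = ecs.run_task(**kwargs)
    return [task["taskArn"] for task in response["tasks"]]


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    params = build_fargate_run_task_kwargs(
        cluster="etl-cluster",
        task_definition="etl-task:1",
        container_name="etl",
        command=["python", "etl.py"],
        subnets=["subnet-00000000"],
        security_groups=["sg-00000000"],
    )
    print(params)
```

Inside an Airflow DAG, you would more likely pass the same values to the Amazon provider's `EcsRunTaskOperator` than call boto3 directly. That operator accepts `cluster`, `task_definition`, `launch_type`, `network_configuration`, and `overrides`. Check the provider version you have installed for the exact signature.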

You can read the full blog post on Medium, published in Towards Data Science: "Building an ETL pipeline with Airflow and ECS".