rdbms data modeling

You can find the full code in here

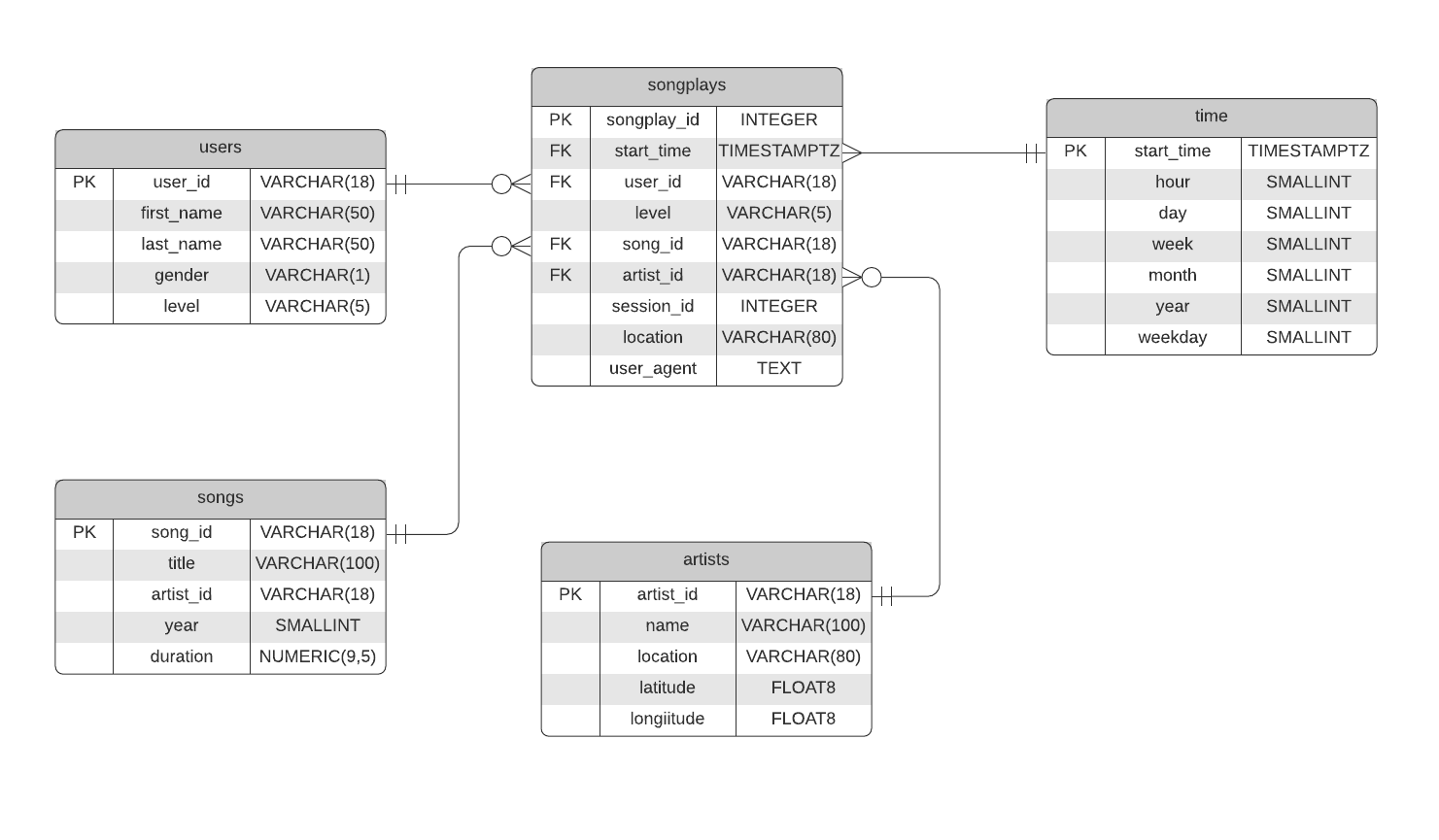

Database Schema

Files

- database.ini: database credentials(host, database, user, password, port)

- create_tables.py: run script to create database tables described on the image above

- etl.py: run script to tranfer all the data from folder

datato database - sql_queries.py: all queries

- etl.ipynb: step-by-step tutorial on how to prepare the data for insertion

Usage

- Starting local PostgreSQL instance:

docker-compose up

You can edit your database credentials in database.ini

- Create the database running:

python create_tables.py -

(optional) Run ETL.ipynb in order to check the full pipeline

- Run

elt.py: it will read and process all the files fromsong_dataandlog_data, loading them into the database

Details

The data insertion is done using three different approaches from psycopg2 library:

- copy_from

- execute(simple insertition statement)

- executemany